Four Lessons Learned from Building Microservices

I’ve had my fair share of projects based on microservices architecture. As fun as they are to work on, we fell into some nasty traps, some of which should’ve been painfully obvious. Here are four of them that I could gather from memory.

Services should hide their internal details

A trap I fell into pretty early in my microservices journey was allowing another team’s microservice to access our microservice’s database directly.

Their team was tasked with building a back-office internal tool capable of reading and writing data in all of the other microservices, including ours. Our public GraphQL API wouldn’t suffice: it had very conservative queries and mutations intended only for our public clients. To give this team what they needed, we would have had to add a bunch of administrative operations to our API.

Amid a very tight project schedule, and thinking it would save us a lot of time, we let their service access our service’s DB directly. Yikes.

Not long after that, we realized that we had effectively given up our freedom to change our database’s schema, because the back-office tool was now tightly coupled to it.

Getting things right was painful, but eventually, we got their microservice’s read paths to go through our API and cut off their dependency on our database.

What we learned

Don’t break a microservice’s boundary by allowing another service to directly access one of its components (e.g. the DB). This covertly introduces a new contract that it’ll have to adhere to. A microservice should (aim to) uphold one contract only: its API.

A microservice should hide as many details as possible. You can only easily change components that are hidden from the outside.

Invest early in the infrastructure required for service-to-service communication, i.e. service discovery and machine-to-machine tokens. You’ll need it eventually. Not having it in place was a major factor that drove us away from the correct solution, because the amount of work to set it up seemed significant.

Chatty microservices likely belong together

On what should’ve been a boring Tuesday, QA reports began pouring in that the application was hanging on certain screens. We confirmed this in our observability tool, Instana, where we saw a couple of GraphQL queries taking more than 30 seconds and then ultimately failing.

The distributed trace in Instana revealed an infinite loop of requests caused by a cyclic dependency between two of our services. We located the bug, which had been introduced in a recent commit: a new piece of middleware in service A was making a request to service B, which would then make a request to service A, triggering service A’s middleware again to fire off a request to service B, and so on.

We realized we had been lucky not to have run into this issue sooner, considering that these two services were already very chatty and had pretty much always been dependent on each other.

As an urgent fix, we reverted the commit and decided to merge the two services into one.

What we learned

Observability tools and distributed tracing save the day. The long alternating sequence of requests in the request trace let us instantly recognize there was a cyclic dependency issue.

Two services being very chatty is a good indicator that they belong together. Most observability tools provide visuals of inter-service communication in your ecosystem that can help you spot which services are communicating excessively.
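
If your tooling lets you export call data, the same idea can be sketched in a few lines. This is only an illustration, not how Instana works: the input format (a list of caller/callee pairs) and the function name `chattiest_pairs` are assumptions made for the example.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Call = Tuple[str, str]  # (caller, callee); a hypothetical export format


def chattiest_pairs(calls: Iterable[Call], top: int = 3) -> List[Tuple[Call, int]]:
    """Count requests per service pair, ignoring direction."""
    # Sorting each pair makes A -> B and B -> A count toward the same pair.
    counts = Counter(tuple(sorted(pair)) for pair in calls)
    return counts.most_common(top)


calls = [("A", "B"), ("B", "A"), ("A", "B"), ("A", "C")]
ranking = chattiest_pairs(calls)
```

A pair that dominates this ranking, with traffic flowing in both directions, is a candidate for the kind of merge we ended up doing.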

The best way to avoid cyclic dependency issues is to not have cyclic dependencies. Shallow cyclic dependencies like in our case (A → B → A) are manageable to a certain degree, but a poor design could end up introducing infinite request loops from transitive circular dependencies like A → B → C → A. Aim for no cyclic dependencies and a unidirectional request flow.
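
One way to keep yourself honest about this is to check the dependency graph for cycles, for example in CI. The sketch below assumes you maintain a map of which service calls which (the `deps` dictionary and the `find_cycle` name are made up for the example); it runs a depth-first search and reports the first cycle it finds, including transitive ones.

```python
from typing import Dict, List, Optional


def find_cycle(deps: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one cycle as a list of service names, or None if there is none."""
    in_progress, done = set(), set()
    path: List[str] = []

    def visit(service: str) -> Optional[List[str]]:
        in_progress.add(service)
        path.append(service)
        for callee in deps.get(service, []):
            if callee in in_progress:
                # We came back to a service that is still on the current path.
                return path[path.index(callee):] + [callee]
            if callee not in done:
                found = visit(callee)
                if found:
                    return found
        path.pop()
        in_progress.discard(service)
        done.add(service)
        return None

    for service in deps:
        if service not in done:
            cycle = visit(service)
            if cycle:
                return cycle
    return None


transitive = find_cycle({"A": ["B"], "B": ["C"], "C": ["A"]})
clean = find_cycle({"A": ["B"], "B": ["C"], "C": []})
```

Here `transitive` holds the A → B → C → A loop, while `clean` is `None` because requests only flow in one direction.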

Minimize alerting noise. Ideally, we should have noticed an issue like this via our monitoring alerts, long before anyone reported it. We had a Slack channel set up for automated monitoring alerts, but there was so much noise in there that we had gotten used to ignoring it. This incident made us take the time to revamp the alerts and be more attentive to the alert channel.

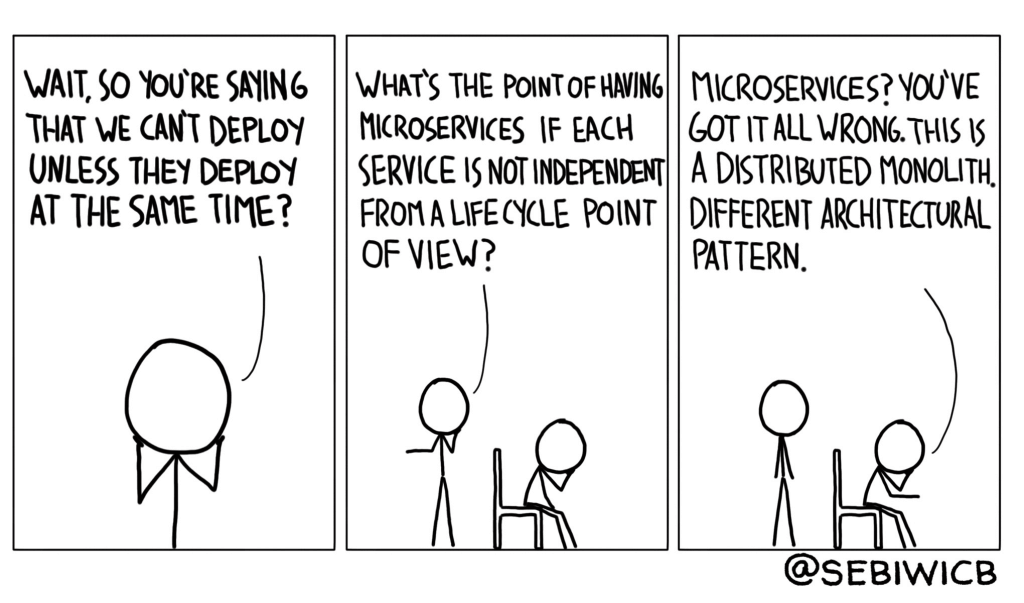

Maintain independent deployability

One of the core principles of microservices is independent deployability. You should be able to deploy a microservice at any time, without having to deploy anything else. Deviating from this rule brings trouble.

In one of my past teams, we had a tradition of gathering once a week in a “war room” for a mass deployment of a usual set of 6-7 microservices to launch new features. To minimize downtime, these services had to be released in a strictly ordered sequence.

The biggest reason for these coordinated deployments was that teams were allowed to break their API contracts. There were no rules in place requiring services to stay backward compatible. We assumed this just came with the territory, accepting it as a normal part of practicing microservices. However, over time the major downsides became very apparent:

If we messed up one of the services and couldn’t push a hotfix quickly, we had to revert all the deployments. This was frustrating.

Allowing microservices to disregard backward compatibility effectively lowered our confidence in the stability of the ecosystem.

Getting many people to sync for the coordinated deployment was difficult, since microservice teams usually had their own schedules. Also, any hiccup or failure during the process meant a lot of wasted time and productivity.

And, ultimately, it never felt right that teams had to concede their autonomy of deploying their microservices to production.

One of the many things we did to address these problems was to add a rule: microservices must maintain backward compatibility and not break their API contracts. If breaking changes are inevitable, teams must be notified ahead of time and API features must go through a deprecation phase first.

What else we learned

Integrate automated checks in the CI/CD pipeline to report and prohibit breaking changes. We added a step to our pipeline that used static analysis to compare the new API contract to the previous one. Additive changes were OK, but any removal or modification of an API method failed the CI/CD job.
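
To give a rough idea of what such a check does, here is a heavily simplified sketch. It is not our actual pipeline step: it assumes each contract has already been reduced to a mapping of operation name to signature string, and the names `breaking_changes` and `ci_exit_code` are invented for the example. For a real GraphQL schema you would want a schema-aware diff tool rather than string comparison.

```python
from typing import Dict, List

Contract = Dict[str, str]  # operation name -> signature (simplified assumption)


def breaking_changes(previous: Contract, current: Contract) -> List[str]:
    """List removals and modifications; additions are allowed."""
    problems = []
    for name, signature in sorted(previous.items()):
        if name not in current:
            problems.append(f"removed: {name}")
        elif current[name] != signature:
            problems.append(f"modified: {name}")
    return problems


def ci_exit_code(previous: Contract, current: Contract) -> int:
    """Return a non-zero exit code when the job should fail."""
    problems = breaking_changes(previous, current)
    for problem in problems:
        print(problem)
    return 1 if problems else 0


previous = {"getOrder": "(id: ID!): Order", "cancelOrder": "(id: ID!): Boolean"}
additive = {**previous, "listOrders": "(): [Order]"}
breaking = {"getOrder": "(id: ID!, full: Boolean!): Order"}
```

With these inputs, `additive` passes because it only adds `listOrders`, while `breaking` fails twice: `cancelOrder` is gone and `getOrder` has a different signature.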

A culture of maintaining backward compatibility did wonders for our sanity and confidence in the system, knowing that we wouldn’t wake up one morning to find out that a microservice’s endpoint was now returning a completely new format or had even disappeared completely.

Feature flags are a very useful tool to separate code deployment from feature deployment. If you want to deploy a breaking change, you can first hide it behind a feature flag, deploy the code, then switch the feature flag on at your convenience. More on feature flags below.

Adding end-to-end tests has a point of diminishing returns

The above statement admittedly applies to most systems regardless of architecture, but it was especially evident in a microservices project I took part in that had very healthy test coverage. The React frontend was full of integration and Cypress end-to-end tests, whereas the backend services had their own unit and end-to-end tests.

After a certain point, the number of bugs that made it through to staging and production began growing linearly with the number of new features added, even though we had strict measures in place that no code was to get merged without its accompanying tests.

We needed a strategy to complement the tests.

What we did (and learned)

The answer was testing in production, as so nicely explained in Cindy Sridharan’s “Testing in Production, the safe way”. This strategy involves applying post-release techniques to tackle encountered issues, rather than trying to prevent them all pre-release.

What this meant for us was cranking up our monitoring and improving our knowledge sharing around our distributed tracing tools, making sure every developer could find their way around them.

But one of the techniques that proved most effective for us was the use of feature flags. New feature code was expected to be wrapped in a feature flag, which we would then use to roll the feature out gradually, starting with our small team, then the whole company, and finally the entire userbase (we’ve had great success with LaunchDarkly). We piggybacked on this mechanism for error resolution as well: when our monitoring tools reported critical errors that originated from a particular feature, we could easily switch the feature off to buy ourselves some time to understand the problem and apply a hotfix. This is a great example of how we did testing in production.
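
The mechanics are simple enough to sketch. The code below is a toy in-memory version for illustration only; it is not the LaunchDarkly SDK, and every name in it (`Stage`, `User`, `FeatureFlags`, the `new-checkout` flag) is hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Stage(Enum):
    OFF = "off"            # also serves as the kill switch
    TEAM = "team"
    COMPANY = "company"
    EVERYONE = "everyone"


@dataclass(frozen=True)
class User:
    name: str
    on_team: bool = False
    is_employee: bool = False


class FeatureFlags:
    """Toy flag store; a real setup would query a flag service instead."""

    def __init__(self) -> None:
        self._stages: Dict[str, Stage] = {}

    def set_stage(self, flag: str, stage: Stage) -> None:
        self._stages[flag] = stage

    def is_enabled(self, flag: str, user: User) -> bool:
        stage = self._stages.get(flag, Stage.OFF)  # unknown flags stay off
        if stage is Stage.EVERYONE:
            return True
        if stage is Stage.COMPANY:
            return user.is_employee or user.on_team
        if stage is Stage.TEAM:
            return user.on_team
        return False


flags = FeatureFlags()
dev = User("dev", on_team=True, is_employee=True)
customer = User("customer")

flags.set_stage("new-checkout", Stage.TEAM)
team_only = (flags.is_enabled("new-checkout", dev),
             flags.is_enabled("new-checkout", customer))

flags.set_stage("new-checkout", Stage.EVERYONE)
rolled_out = flags.is_enabled("new-checkout", customer)

flags.set_stage("new-checkout", Stage.OFF)  # critical errors reported: switch it off
killed = flags.is_enabled("new-checkout", dev)
```

The feature code itself only ever asks `is_enabled(...)`, so widening the audience or switching the feature off is a configuration change rather than a deployment.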

Endnotes

Some of the principles and rules I’ve mentioned above are brilliantly explained in the book Building Microservices by Sam Newman, which I’ve had the chance to read recently. It’s a great resource with principles and best practices that will save you from the many pitfalls of microservices. The second edition has been released and I highly recommend it!

What microservice lessons have you learned in your experience? Could you relate to any of the lessons above? Tweet me @denhox – I’m very curious to hear your story!